Welcome to the epicenter of innovation in LLM Agents—where builders, developers, researchers, entrepreneurs, and AI enthusiasts meet to redefine the future of LLM Agents. The AgentX – LLM Agents MOOC Competition, hosted by Berkeley RDI in conjunction with the Advanced LLM Agents MOOC, is calling for trailblazers ready to push the boundaries of AI and Agents. Whether you’re exploring uncharted research frontiers or building the next big AI/Agents startup, AgentX is your launchpad to make a lasting impact. Join us to build breakthrough LLM Agents, forge collaborative partnerships, and be part of a vibrant community shaping the future of AI.

The AgentX Competition is open to the public and will be held primarily virtually, culminating in an in-person Demo Day at the Agentic AI Summit this August at UC Berkeley. Finalists will showcase their prototypes to an audience of top AI companies, leading VCs and tech industry leaders—offering unprecedented opportunities to forge strategic partnerships, connect with investors, and engage with potential customers. Whether you're seeking funding or corporate partnerships, or expanding your market, Demo Day is your platform to shine.

The AgentX Competition is designed to have two tracks:

Entrepreneurship Track:

- Focus: Develop startup ideas (including MVP) leveraging LLM Agents, such as developer tools, enterprise software solutions, and consumer applications.

- Perfect For: Builders, entrepreneurs, startup teams, etc.

- Submission Requirement: Working prototypes (e.g. product demos) and a pitch deck (incl. detailed product development and business plan)

- View detailed submission requirements here

Research Track:

- Focus: Explore the frontiers of LLM Agents technology. Potential research topics include but are not limited to Agent Architecture, AI Safety & Alignment, Reasoning & Planning, Benchmarks & Evaluation, Fundamental Capabilities, and Multi-agent & Decentralization.

- Perfect For: Students, developers, academics, and research teams

- Submission Requirement: Research papers, prototypes or proof-of-concept demos

- View detailed submission requirements here

For AgentX discussion, please join the AgentX channel at the LLM Agents Discord. For more information and answers to frequently asked questions, please refer to our ongoing AgentX FAQ.

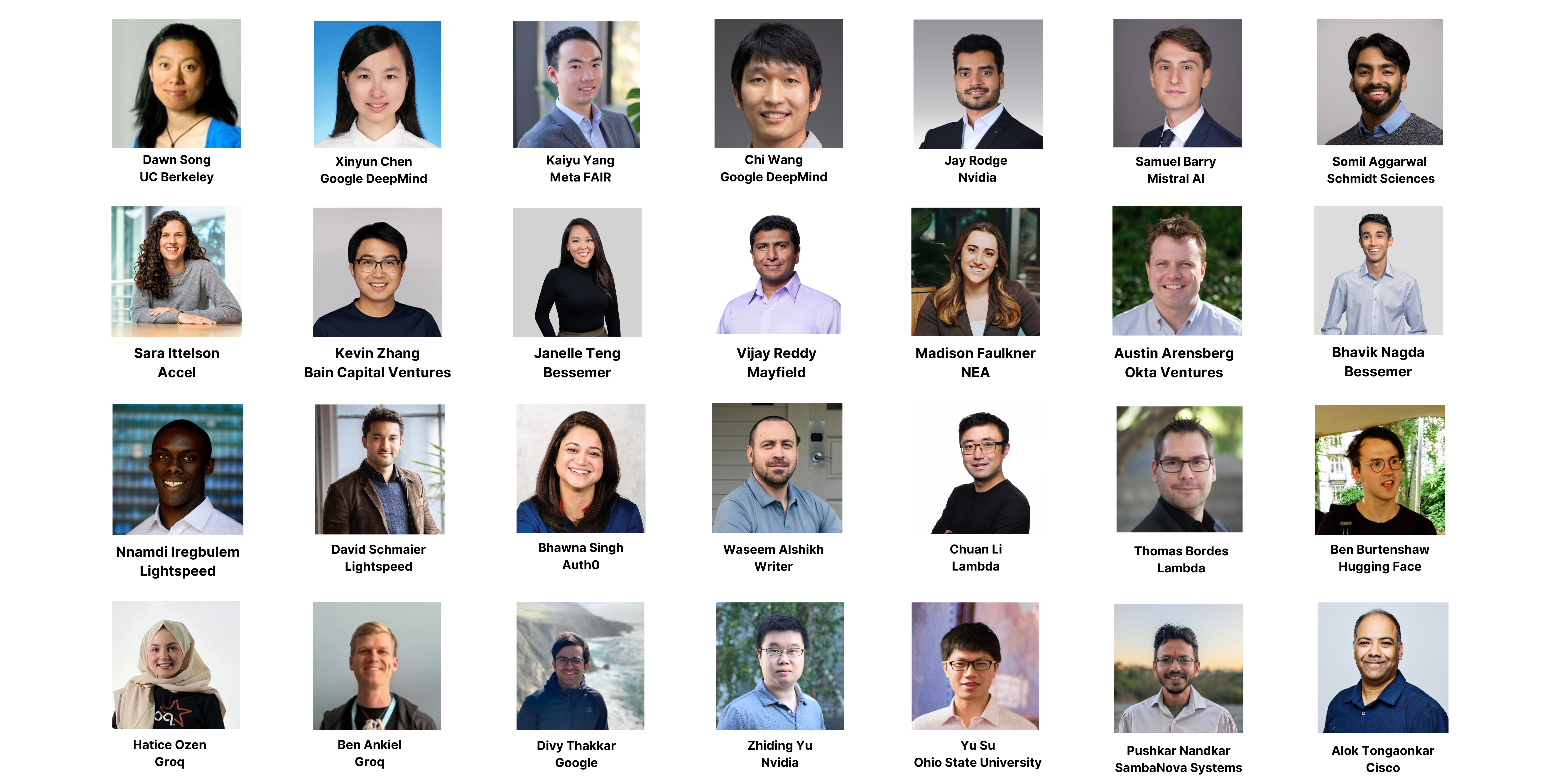

PARTNERS & SPONSORS

PRIZES & RESOURCES

- Up to $40,000 in cash awards for winners of the Entrepreneurship Track

- Up to $30k in credits to be awarded to winning teams from both tracks

- All members of winning teams from each track (Entrepreneurship and Research) will receive a 6-month HF PRO subscription

- Up to $5,000/$3,000/$2,000 in HF credits will be awarded to the top 3 teams in the Entrepreneurship Track

- Up to $1,000/$500/$300 in credits will be awarded to the top 3 teams in each track (Entrepreneurship and Research)

- Up to $5,000/$3,000/$2,000 in gift cards will be awarded to the top 3 teams in the Entrepreneurship Track that have used Auth0.ai in their product/project building

- Up to $3,000/$1,500/$500 in credits will be awarded to the top 3 teams in each track (Entrepreneurship and Research)

- Up to $10k in credits to be awarded to winning teams from both tracks

- Up to $12,000 in prizes ($10K Writer credits + $2K gift cards) for top two Research Track teams focusing on agent architecture and multi-agent systems

- Up to $10k in gift cards to be awarded to winning teams with AI Safety focus from both tracks

Everyone can use the Gemini API and Google AI Studio with Gemini 2.0 Flash free of charge, with no credit card needed and high rate limits.

Instructions to start developing with the Gemini API, with no credit card required:

- Go to Google AI Studio

- Log in with your Google account.

- Create an API key.

- Read the Gemini API docs

Key info:

- 15 RPM (requests per minute)

- 1 million TPM (tokens per minute)

- 1,500 RPD (requests per day)

- The pricing / rate limits page (with the free tier info) can be found here.

Docs

- Gemini API Docs

- Participants can also get started with the Gemini API Cookbook, if teams would like to dive right into code. There you will find many notebooks with complete code demonstrating how to use the Gemini API.
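Teams that want to stay under the free-tier request limits listed above (15 RPM, 1,500 RPD) could throttle calls on the client side. The following is a minimal sketch, not part of the Gemini SDK: the class `SlidingWindowLimiter` and the helper `acquire` are hypothetical names, the token-per-minute limit is not modeled, and how the service itself counts its windows is an assumption not covered here.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Hypothetical client-side limiter: at most max_calls in any window_s seconds."""

    def __init__(self, max_calls, window_s, clock=time.monotonic):
        self.max_calls = max_calls
        self.window_s = window_s
        self.clock = clock      # injectable so the limiter can be tested without waiting
        self.calls = deque()    # timestamps of calls still inside the window

    def wait_time(self):
        """Seconds to wait before the next call is allowed (0.0 if allowed now)."""
        now = self.clock()
        # Drop timestamps that have left the window.
        while self.calls and now - self.calls[0] >= self.window_s:
            self.calls.popleft()
        if len(self.calls) < self.max_calls:
            return 0.0
        return self.window_s - (now - self.calls[0])

    def record(self):
        """Note that a call is being made right now."""
        self.calls.append(self.clock())


def acquire(limiters, sleep=time.sleep):
    """Block until every limiter allows a call, then record the call in each."""
    while True:
        delay = max(limiter.wait_time() for limiter in limiters)
        if delay <= 0:
            break
        sleep(delay)
    for limiter in limiters:
        limiter.record()


# Free-tier figures quoted on this page: 15 requests per minute, 1,500 per day.
per_minute = SlidingWindowLimiter(15, 60)
per_day = SlidingWindowLimiter(1500, 24 * 60 * 60)
```

Calling `acquire([per_minute, per_day])` immediately before each API request keeps a single process within both request limits; several processes sharing one API key would need a shared counter instead.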

- $100 serverless API credits for Inference to every individual participant

- $400 GPU credits per team to the select 50 teams

- $100 HF credits per team to the select 100 teams. HF credit can be used towards Hugging Face's paid services, e.g. Inference Endpoints and building Spaces (ML Apps) with GPUs. Credits can also be used for subscriptions to PRO and Enterprise

- $100 Groq API token credits per team to the select 100 teams

- $25 coupons (e.g. API credits) per team to the select 50 teams

- For career opportunities with Okta/Auth0, please visit https://www.okta.com/company/careers/

AGENTX FINALISTS

Team Raycaster

About: Raycaster is an AI-native workspace for life science operations. Our AI agents are deployed across commercial, R&D, clinical, and legal teams through ambient agent swarms: specialized AI that works continuously to monitor patents, publications, clinical trials, and competitive intelligence. Unlike traditional AI tools, our agents proactively surface insights, learn from usage patterns to eliminate repetitive work, and maintain zero-hallucination through verifiable citations, enabling teams to compress months of research into hours while ensuring 100% regulatory compliance.

🥇 1st place - Auth0 Prize (Entrepreneurship Track)

Team Tradestack

About: AI-powered office teams for trade contractors.

Team WeKruit

About:

- Training: WeKruit offers candidates on-demand AI mock interviews with personalized feedback to sharpen their communication and problem-solving skills.

- Hiring: WeKruit automates applicant screening and filtering through customizable interview/evaluation workflows, delivering ranked candidate pools.

Team Minimist

About: We are making second hand the default choice. Our team is building technical solutions for various points in the value chain of the re-commerce sector.

Team CrisPro

About: CrisPRO is an AI-driven agentic EMR and computational platform designed to facilitate the end-to-end design, simulation, and validation of CRISPR-based therapeutic and prophylactic strategies. CrisPro integrates advanced AI orchestration, proprietary Digital Twin simulations, and context-aware therapeutic modules to enhance precision, reduce failure rates, and accelerate clinical translation.

CrisPRO is positioned to revolutionize CRISPR-based therapeutic development by providing an end-to-end solution that integrates data processing, AI-driven decision-making, and predictive modeling to de-risk and expedite the path from discovery to clinical application.

Team Ennube.ai

About: Turnkey AI Agents for your CRM.

Team NovaSky

Abstract: Most existing reinforcement learning (RL) frameworks are tailored for stateless, short-horizon interactions, such as search-augmented reasoning or isolated code execution. However, real-world software engineering tasks, exemplified by benchmarks like SWE-Bench, require agents to operate in stateful, dynamic environments with long-horizon dependencies and delayed feedback. These settings introduce new challenges in both infrastructure design and learning algorithms.

We present SkyRL, the first framework to enable end-to-end online RL training of large language model (LLM) agents on complex, multi-turn software engineering tasks. Built on top of VeRL and OpenHands, SkyRL provides asynchronous rollout execution, scalable environment orchestration, and pipelined multi-stage execution. Leveraging these components, we train the agents and demonstrate promising performance gains on SWE-Bench-Verified using only around 300 training examples across multiple model lines, showcasing the huge potential for end-to-end RL training for such a long-horizon task.

Team Agent-VBench

Abstract: Deep reasoning is fundamental for solving complex tasks, especially in vision-centric scenarios that demand sequential, multimodal understanding. However, existing benchmarks typically evaluate agents with fully synthetic, single-turn queries, limited visual modalities, and lack a framework to assess reasoning quality over multiple steps as required in real-world settings. To address this, we introduce Agent-VBench, a large-scale benchmark for evaluating vision-centric agents’ multi-step and deep reasoning capabilities in real-world, multimodal settings. Agent-VBench features 828 agentic tasks with authentic visual contexts, including images, multi-image comparisons, videos, and instructional text. These tasks span six major agentic environments: general visual reasoning, web browsing, security and surveillance, autonomous driving, sports, and math reasoning. Our benchmark requires agents to integrate tool use with explicit, stepwise decision-making in these diverse settings. In addition, we propose a fine-grained, step-level evaluation framework that assesses the correctness and logical coherence of each reasoning step and the effectiveness of tool usage throughout the task. Our results reveal that even the best-performing models, including GPT, Gemini, and Qwen families, struggle to solve multi-step vision tasks, achieving less than 50% full-chain success. These findings highlight key bottlenecks in current LMM reasoning and tool-use capabilities and identify future research directions in vision-centric agentic reasoning models.

Team Hoosiers

Abstract: In this project, we propose Maris, a pioneering solution designed to address sensitive data disclosure threats in Multi-Agent Collaborative Systems (MACS) by enabling privacy controls at the system development level. Maris introduces a privacy-enhanced development paradigm for secure agent collaboration, and its effectiveness has been demonstrated in three different MACS, including a hospital outreach MACS, an airline customer support chatbot system, and Optiguide (Microsoft’s supply chain optimization system). Additionally, we propose a novel MACS privacy assessment framework to evaluate various tasks and threat scenarios.

Team CVE-bench

Abstract: Large language model (LLM) agents are increasingly capable of autonomously conducting cyberattacks, posing significant threats to existing applications. This growing risk highlights the urgent need for a real-world benchmark to evaluate the ability of LLM agents to exploit web application vulnerabilities. However, existing benchmarks fall short as they are limited to abstracted Capture the Flag competitions or lack comprehensive coverage. Building a benchmark for real-world vulnerabilities involves both specialized expertise to reproduce exploits and a systematic approach to evaluating unpredictable threats. To address this challenge, we introduce CVE-Bench, a real-world cybersecurity benchmark based on critical-severity Common Vulnerabilities and Exposures. In CVE-Bench, we design a sandbox framework that enables LLM agents to exploit vulnerable web applications in scenarios that mimic real-world conditions, while also providing effective evaluation of their exploits. Our evaluation shows that the state-of-the-art agent framework can exploit up to 13% of the vulnerabilities.

Team MedPAIR

Abstract: Large Language Models (LLMs) have demonstrated remarkable performance on various medical question-answering (QA) benchmarks, including standardized medical exams. However, correct answers alone do not ensure correct logic, and models may reach accurate conclusions through flawed processes. In this project, we introduce the MedPAIR (Medical Dataset Comparing Physicians and AI Relevance Estimation and Question Answering) dataset to evaluate how physician trainees and LLMs prioritize relevant information when answering QA questions. We obtain annotations on 1,300 QA pairs from 36 physician trainees, labeling each sentence within the question components for relevance. We compare these relevance estimates to those for LLMs, and further evaluate the impact of these "relevant" subsets on downstream task performance for both physician trainees and LLMs. We find that LLMs are frequently not aligned with the content relevance estimates of physician trainees. After filtering out physician trainee-labeled irrelevant sentences, accuracy improves for both the trainees and the LLMs. All LLM and physician trainee-labeled data are available at: http://medpair.csail.mit.edu/.

Team CoP

Abstract: Recent advances in Large Language Models (LLMs) have spurred transformative applications in various domains, ranging from open-source to proprietary LLMs. However, jailbreak attacks, which aim to break safety alignment and user compliance by tricking the target LLMs into answering harmful and risky responses, are becoming an urgent concern. The practice of red-teaming for LLMs is to proactively explore potential risks and error-prone instances before the release of frontier AI technology. This paper proposes an agentic workflow to automate and scale the red-teaming process of LLMs through the Composition-of-Principles (CoP) framework, where human users provide a set of red-teaming principles as instructions to an AI agent to automatically orchestrate effective red-teaming strategies and generate jailbreak prompts. Distinct from existing red-teaming methods, our CoP framework provides a unified and extensible framework to encompass and orchestrate human-provided red-teaming principles to enable the automated discovery of new red-teaming strategies. When tested against leading LLMs, CoP reveals unprecedented safety risks by finding novel jailbreak prompts and improving the best-known single-turn attack success rate by up to 19.0 times.

Team ANS

Abstract: This project presents a working demonstration of the Agent Name Service (ANS), a novel architecture designed for the secure discovery and interoperability of AI agents. Addressing the growing need for a robust public agent directory, ANS leverages concepts from DNS and Public Key Infrastructure (PKI) to establish verifiable agent identities. This prototype showcases key ANS functionalities, including agent registration with structured naming conventions, a simulated PKI for certificate issuance, and a lookup mechanism enabling discovery based on various agent attributes.

The implemented system allows users to register AI agents, simulating the generation of a CA-signed certificate. It further enables the lookup of registered agents, returning their details along with a mock secure response. Basic agent lifecycle management is also demonstrated through functionalities for agent registration renewal and revocation. Underpinning these features is a persistent SQLite database for storing agent records and Zod schema validation for ensuring structured and reliable communication.

This demonstration provides a tangible illustration of the core principles behind the Agent Name Service, highlighting its potential to facilitate secure discovery and management in future multi-agent systems. By showcasing these implemented functionalities, the project aims to contribute to discussions and advancements in building trustworthy and interoperable AI agent ecosystems.
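The register, lookup, renew, and revoke lifecycle described in the ANS abstract above can be illustrated with a small sketch. This is not the team's code: the table layout, function names, example agent names, and the 90-day validity period are all assumptions made for illustration, certificate issuance and schema validation are omitted, and an in-memory SQLite database stands in for the persistent one the abstract mentions.

```python
import sqlite3

TTL_SECONDS = 90 * 24 * 60 * 60  # assumed validity period, not from the abstract


def open_registry(path=":memory:"):
    """Open (or create) a hypothetical agent registry backed by SQLite."""
    db = sqlite3.connect(path)
    db.execute(
        """CREATE TABLE IF NOT EXISTS agents (
               name       TEXT PRIMARY KEY,
               capability TEXT NOT NULL,
               endpoint   TEXT NOT NULL,
               expires_at REAL NOT NULL,
               revoked    INTEGER NOT NULL DEFAULT 0
           )"""
    )
    return db


def register(db, name, capability, endpoint, now):
    """Add an agent record that stays valid for TTL_SECONDS from `now`."""
    db.execute(
        "INSERT INTO agents VALUES (?, ?, ?, ?, 0)",
        (name, capability, endpoint, now + TTL_SECONDS),
    )
    db.commit()


def renew(db, name, now):
    """Extend the validity of a registration that has not been revoked."""
    db.execute(
        "UPDATE agents SET expires_at = ? WHERE name = ? AND revoked = 0",
        (now + TTL_SECONDS, name),
    )
    db.commit()


def revoke(db, name):
    """Mark a registration as revoked so lookups no longer return it."""
    db.execute("UPDATE agents SET revoked = 1 WHERE name = ?", (name,))
    db.commit()


def lookup(db, capability, now):
    """Return (name, endpoint) for valid, unrevoked agents with the capability."""
    return db.execute(
        "SELECT name, endpoint FROM agents "
        "WHERE capability = ? AND revoked = 0 AND expires_at > ? ORDER BY name",
        (capability, now),
    ).fetchall()
```

Timestamps are passed in as plain numbers (for example `time.time()`) so that expiry behavior is easy to exercise in tests.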

Team ABC

Abstract: Benchmarks are essential tools for quantitatively tracking progress in AI. As AI agents become increasingly capable of solving complex, real-world tasks, researchers and practitioners have introduced agentic benchmarks that evaluate agents based on task outcomes. However, we show that using outcome-based design suboptimally can misrepresent the true capabilities of agents. For example, SWE-bench-Verified uses insufficient test cases, while τ-bench counts empty responses as successful. Such flaws can lead to under- or overestimation of agents’ performance by up to 100% in relative terms. To address this issue, we introduce the Agentic Benchmark Checklist (ABC), a set of benchmark development guidelines that we synthesized from our benchmark-building experience, a survey of best practices, and previously reported issues.

Team AgentSynth

Abstract: We introduce AgentSynth, a scalable and cost-efficient pipeline for automatically synthesizing high-quality tasks and trajectory datasets for generalist computer-use agents. Leveraging information asymmetry, AgentSynth constructs subtasks that are simple during generation but significantly more challenging when composed into long-horizon tasks, enabling the creation of over 6,000 diverse and realistic tasks. Our pipeline begins with an LLM-based task proposer guided by a persona, followed by an execution agent that completes the task and logs the trajectory. This process is repeated iteratively to form a sequence of subtasks, which are then summarized by a separate agent into a composite task of controllable difficulty. A key strength of AgentSynth is its ability to precisely modulate task complexity by varying the number of subtasks. Empirical evaluations show that state-of-the-art LLM agents suffer a steep performance drop, from 18% success at difficulty level 1 to just 4% at level 6, highlighting the benchmark's difficulty and discriminative power. Moreover, our pipeline achieves a low average cost of $0.60 per trajectory, orders of magnitude cheaper than human annotations.

Team Online Prompt-to-Leaderboard

Abstract: The evaluation of large language models (LLMs) traditionally relies on static leaderboards, which cannot capture prompt-specific performance variations. Prompt-to-Leaderboard (P2L) addresses this limitation by creating prompt-specific rankings through a technique called “prompt-to-regression”. P2L itself unlocks granular per-prompt evaluations, cost-optimal routing between LLMs, and automatic strength/weakness detection. However, P2L has a key limitation that prevents real-world deployability: P2L remains constrained by its fixed architecture, which cannot accommodate newly released models without complete retraining. Furthermore, P2L is trained on over 2 million rows of human preference data, with the dataset size constantly increasing with time. Therefore, re-training P2L to update the model is prohibitively expensive, and increasingly so. Unfortunately, naively training P2L with chronologically ordered data causes catastrophic forgetting due to temporal distribution shift, making this strategy ineffective.

Therefore, we introduce Online P2L (OP2L), which enables continuous learning of human preferences through two strategic buffering approaches, Replay Buffer and our novel Geometric Buffer, that selectively manage training examples based on model familiarity, thus preventing catastrophic forgetting while preserving evaluation accuracy as new models enter the ecosystem. Importantly, both these algorithms only modify the training data as it is streamed in, and require no complex architecture or learning algorithm changes. We perform hyperparameter ablations on both the Replay Buffer and Geometric Buffer to explore the resulting model quality. We find both strategies perform significantly better than the chronological baseline, nearly matching oracle performance. With the replay buffer, we decrease the loss delta by 65.1% between the OP2L model and the oracle fully re-trained P2L model compared to naive chronological training. Even better, with our novel Geometric Buffer, we decrease the loss delta by 78.1%.

With Online P2L, we can realize much of the potential of the P2L framework. With our OP2L, we can continuously update the OP2L with new incoming data and new LLMs. In theory, this means we can: 1. Continuously provide granular prompt-level model leaderboards even with newly deployed models. 2. Hold the highest performance via a continuously updated router. 3. Continuous, granular, regression tracking of new model deployments. Therefore, OP2L and the techniques presented in this paper are the first step towards continuous, up-to-date, fine-grained LLM evaluations that allow safe and auditable model deployments. Moreover, our approach devises a generalizable framework that can be applied beyond P2L, such as in recommendation systems, alignment pipelines, or reward model training.

Team GNAIX

Abstract: This study proposes an AI agent-based autonomous manufacturing system that integrates LLMs with Asset Administration Shell (AAS) digital twin representations to enable flexible, rapidly reconfigurable production systems.

The methodology uses LLMs to automatically generate Model Context Protocol (MCP) tools from AAS data, demonstrated through autonomous AFPM motor core manufacturing where AI agents interpret natural language commands and control PLC equipment.

Key contributions include empirical validation using 4,500+ AAS instances for LLM-to-MCP tool conversion and successful deployment of complete agent-driven manufacturing cycles, confirming feasibility for autonomous production coordination.

Team AIOS

Abstract: LLM-based intelligent agents face significant deployment challenges, particularly related to resource management. Allowing unrestricted access to LLM or tool resources can lead to inefficient or even potentially harmful resource allocation and utilization for agents. Furthermore, the absence of proper scheduling and resource management mechanisms in current agent designs hinders concurrent processing and limits overall system efficiency.

To address these challenges, this paper proposes the architecture of AIOS (LLM-based AI Agent Operating System) under the context of managing LLM-based agents. It introduces a novel architecture for serving LLM-based agents by isolating resources and LLM-specific services from agent applications into an AIOS kernel. This AIOS kernel provides fundamental services (e.g., scheduling, context management, memory management, storage management, access control) for runtime agents. To enhance usability, AIOS also includes an AIOS SDK, a comprehensive suite of APIs designed for utilizing functionalities provided by the AIOS kernel. Experimental results demonstrate that using AIOS can achieve up to 2.1 times faster execution for serving agents built by various agent frameworks.

Team EvoGit

Abstract: We introduce EvoGit, a decentralized multi-agent framework for collaborative software development driven by autonomous code evolution. EvoGit deploys a population of independent coding agents, each proposing edits to a shared codebase without centralized coordination, explicit message passing, or shared memory.

Instead, all coordination emerges through a Git-based phylogenetic graph that tracks the full version lineage and enables agents to asynchronously read from and write to the evolving code repository.

Experiments demonstrate EvoGit’s ability to autonomously produce functional and modular software artifacts across two real-world tasks: (1) building a web application from scratch using modern frameworks, and (2) constructing a meta-level system that evolves its own language-model-guided solver for the bin-packing optimization problem.

Our results underscore EvoGit’s potential to establish a new paradigm for decentralized, automated, and continual software development.

Team SocialAgent

Abstract: Understanding the intrinsic mechanisms of social platforms is an urgent demand to maintain social stability. The rise of large language models provides significant potential for social network simulations to capture attitude dynamics and reproduce collective behaviors. However, existing studies mainly focus on scaling up agent populations, neglecting the dynamic evolution of social relationships. To address this gap, we introduce DynamiX, a novel large-scale social network simulator dedicated to dynamic social network modeling. DynamiX uses a dynamic hierarchy module for selecting core agents with key characteristics at each timestep, enabling accurate alignment of real-world adaptive switching of user roles. Furthermore, we design distinct dynamic social relationship modeling strategies for different user types. For opinion leaders, we propose an information-stream-based link prediction method recommending potential users with similar stances, simulating homogeneous connections, and autonomous behavior decisions. For ordinary users, we construct an inequality-oriented behavior decision-making module, effectively addressing unequal social interactions and capturing the patterns of relationship adjustments driven by multi-dimensional factors. Experimental results demonstrate that DynamiX exhibits marked improvements in attitude evolution simulation and collective behavior analysis compared to static networks. Besides, DynamiX opens a new theoretical perspective on follower growth prediction, providing empirical evidence for opinion leader cultivation and social platform research.

Team Training-Free Routing

Abstract: Increasing demand for Large Language Model (LLM) services imposes substantial deployment and computation costs on providers. LLM routing offers a cost-efficient solution by directing queries to the optimal LLM based on model and query features. However, existing works primarily focus on offline scenarios and struggle to adapt to online settings with high query volume and constrained token budgets. In this work, we introduce the first training-free algorithm for online routing scenarios. Our algorithm leverages approximate nearest neighbor search to efficiently estimate the features of queries and performs a one-time optimization over a small set of initial queries to learn a set of routing weights that guide future routing. We provide a theoretical guarantee that the algorithm achieves a competitive ratio of 1-o(1) under natural assumptions, which is further validated by extensive experiments across 3 benchmark datasets and 8 baselines, showing an average improvement of 3.55x in performance, 1.85x in cost efficiency, and nearly 4.25x in throughput.

TIMELINE

| Date | Event |
|---|---|
| 3/13 - 3/30 | AgentX: Registration and Team Formation |
| 3/31 - 5/31 | Build; office hours with Partners & Sponsors; midpoint check-in for feedback |
| 5/31 11:59pm PT | Submission Deadline for AgentX |
| June | Judging |
| 8/2 | Demo Day & Awards at the Agentic AI Summit |

SUBMISSION GUIDELINES

🚀 Entrepreneurship Track

Develop startup ideas (including MVP) leveraging LLM Agents, such as developer tools, enterprise software solutions, and consumer applications.

Pitch Deck (≤20 slides, excluding appendix)

- Problem / Pain Point

  - Clear, specific description

  - Who currently suffers and why existing solutions fail

- Target Audience & Market Size

  - TAM / SAM / SOM estimates

  - Personas or customer segments

- Evidence of Demand

  - Survey results, wait-list numbers, pilot data, market reports (cite sources)

- Solution Overview

  - How the LLM agent solves the pain point

  - Live Demo Screenshot (hyperlink to full video)

- Technical Architecture (high-level)

  - Model usage, agent loop, data flow, infra, etc.

- Competitive Landscape & Differentiation

- Go-to-Market & Growth Strategy

- Revenue Model & Unit Economics

- Roadmap

  - Major milestones: alpha, beta, v1.0, scaling

- Team

  - Names, roles, 1-sentence bio, LinkedIn/GitHub

- Asks (e.g. Funding, partnerships, hiring, advisors, etc.)

- Appendix slides may include detailed architecture diagrams, or additional metrics, etc.

Product-Demo Video (max 3 min)

- Put the video on YouTube. Make sure it is unlisted/public so that it is accessible to the judges

- Screen-capture or live camera acceptable

- Show real workflow end-to-end; avoid PowerPoint narrations

- Voice-over or captions required for accessibility

- No heavy post-production; judges want authenticity

Live Product Link

- Provide a URL that any judge can access

- Accepted options:

  - Web app / mobile TestFlight, etc.

  - Hugging Face Space, Inference Endpoint, or similar

  - GitHub repo with 1-click deploy (e.g., Codespaces)

Technical Appendix (optional)

- If your system involves novel algorithms, evaluations, or complex infra, bundle a short PDF (~5 pages) covering:

  - Model/agent architecture diagram

  - Proprietary vs. open-source components

  - Dataset sources & licenses

  - Safety / alignment measures

  - Benchmark results (if any) with methodology

- Market Need & User Impact

  - Clear definition of problem/pain-point and target audience

  - Evidence of demand (e.g., surveys, market analysis, wait-lists)

- Technical Execution

  - Robustness of the working prototype

  - Quality of LLM-agent integration

  - Feasibility of scaling from MVP to full product

- Scalability & Business Model

  - Strategic plan (timelines, milestones)

  - Path to monetization and a self-sustaining business

- Pitch Quality

  - Clarity and persuasiveness of narrative

  - Cohesive story linking problem, solution, and market opportunity

  - Ability to inspire investor confidence

🔬 Research Track

Explore the frontiers of LLM Agents technology. Potential research topics include but are not limited to Agent Architecture, AI Safety & Alignment, Reasoning & Planning, Benchmarks & Evaluation, Fundamental Capabilities, and Multi-agent & Decentralization.

Scientific Paper (7-8 pages, excluding appendix)

- Upload a scientific manuscript (PDF) with an abstract, introduction, methods, results, and discussion (or equivalents) that describes what you’ve done, how you’ve done it, and why it matters.

- Maximum 7-8 pages

  - INCLUDING tables and figures

  - NOT INCLUDING references or appendices

- Examples of papers are here: https://llmagents.github.io/#accepted-papers (can be found on Google Scholar)

- Additional guidelines:

  - If you are making an application, include a brief document explaining how users can interact with your application.

  - If you are making a benchmark, include clear guidelines on using the benchmark, adding new agents, and interpreting results, along with any baseline results for reference.

  - If you are making a research contribution, include your approach, validation methods, and performance improvements over baselines

Video Presentation (max 3 min)

- Briefly outline the problem, your solution, technical implementation, and potential impact. You can also include a research demo (optional).

- Put the video on YouTube. Make sure it is unlisted/public so that it is accessible to the judges

GitHub Repository

- Share a GitHub link containing your project code, data, or resources, along with a README explaining how to run or use your project.

- Set the repository public so that it is accessible to any judges

- Technical Innovation

  - Novelty of architectural designs, algorithms, or frameworks

  - Improvements in reasoning, safety, fundamental capabilities, etc.

- Methodological Rigor

  - Soundness of experimental design and reproducibility

  - Clarity in benchmarking against existing approaches

- Impact Potential

  - Long-term implications for LLM agent research or applications

  - Scalability of the solution

- Clarity & Presentation

  - Coherence of the research paper and demo

  - Cohesive story linking problem, solution, and market opportunity

  - Ability to convey complex ideas in an accessible manner