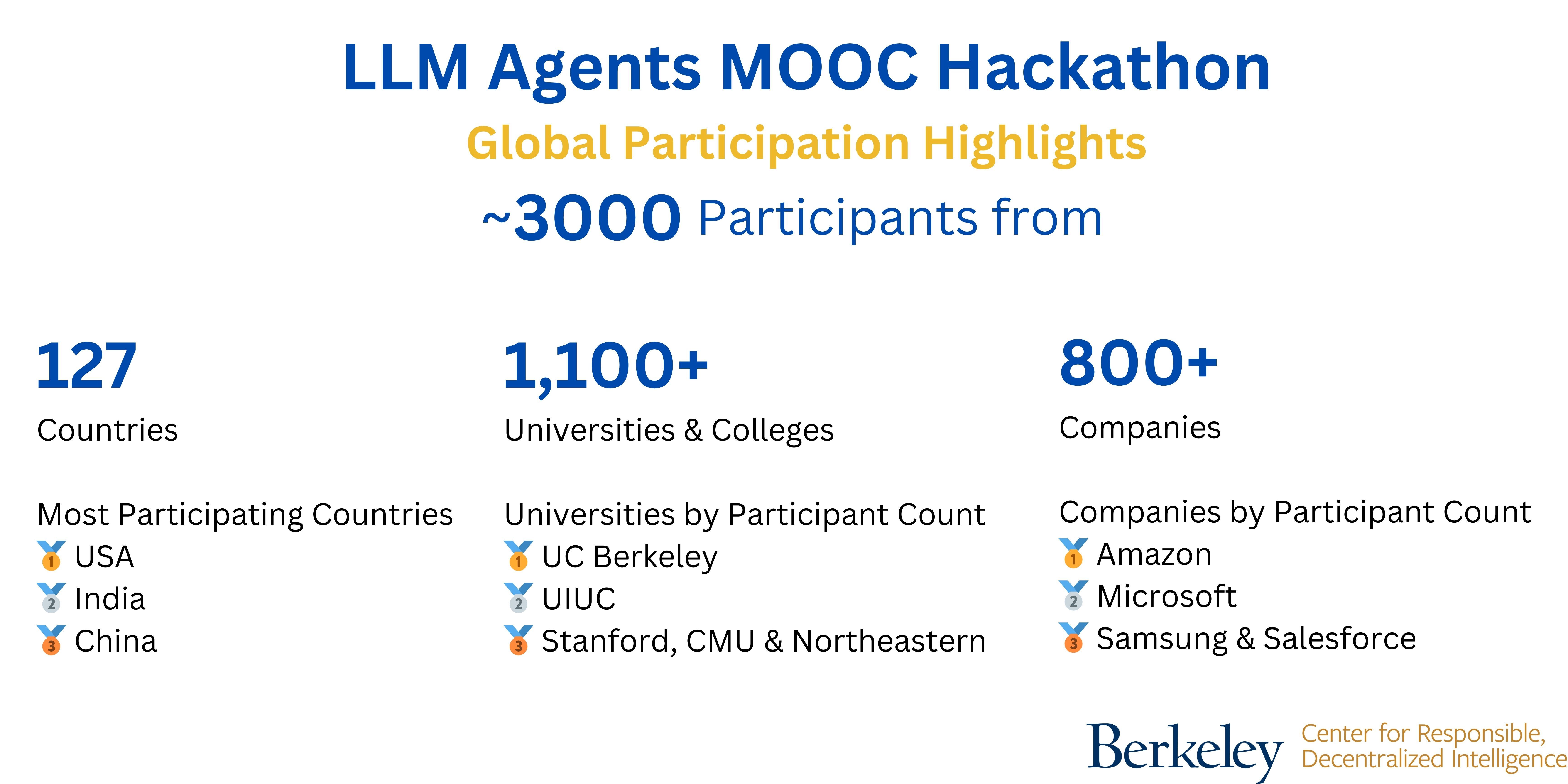

This LLM Agents Hackathon, hosted by Berkeley RDI and in conjunction with the LLM Agents MOOC, aims to bring together students, researchers, and practitioners to build and showcase innovative work in LLM agents, grow the AI agent community, and advance LLM agent technology. It is open to the public and will be held both virtually and in-person at UC Berkeley.

Our hackathon has successfully concluded. Thank you for participating. Join our Spring 2025 Advanced Large Language Model Agents MOOC today!

The hackathon is designed to have 5 tracks:

- Applications Track: Building innovative LLM agent applications across diverse domains, from coding assistants to personal AI companions.

- Benchmarks Track: Creating and improving benchmarks for AI agents, enabling standardized evaluation and comparison of different agent architectures and capabilities.

- Fundamentals Track: Enhancing core agent capabilities such as memory, planning, reasoning, and tool use through novel frameworks and techniques.

- Safety Track: Addressing critical safety concerns in AI agent deployment, including misuse prevention, privacy, interpretability, and broader societal impacts.

- Decentralized and Multi-Agents Track: Advancing tools, frameworks, and applications for decentralized multi-agent systems, focusing on enhanced capabilities, interactions, and deployment.

We hope this hackathon with these specially-designed tracks can help demonstrate that we are entering a new phase of maturity and practicality of LLM agent technology where:

- Every developer can learn to use LLM agent technology for building innovative applications (Applications Track)

- Decentralized community collaboration can effectively bring the community together to build key technologies and infrastructure for LLM agents, serving as important foundations and public good for the community in AI (Benchmarks, Fundamentals, Safety, and Multi-Agent Tracks)

PRIZES & RESOURCES

⭐ More than $200k in prizes and resources! With more to be announced...

PRIZES 3 Winners! 1st, 2nd and 3rd place - $25k, $10k, and $5k in OpenAI credits

RESOURCES Access & credits are available for hackathon teams

⧉ Learn More Here

PRIZES Winners receive prizes totaling $25k in Google Cloud credits

RESOURCES Access & credits are available for hackathon teams

⧉ Learn More Here

PRIZES Winners will be selected for a total of $15,000 in gift cards for the Applications track.

RESOURCES Learn more and check their openings.

⧉ Learn More Here

PRIZES Winners selected across all 5 tracks for prizes totaling $4.5k in λ credits

RESOURCES Llama API endpoint throughout the hackathon and GPU compute credits

⧉ Learn More Here

PRIZES Winners receives prizes totaling up to $32k in Intel Tiber Cloud Credits

RESOURCES Compute resources, including CPU and GPU.

⧉ Learn More Here

PRIZES Winners will be selected for up to $10,000 in gift cards for the Safety track.

RESOURCES Learn more about their work on AI Safety

⧉ Learn More Here

PRIZES Up to $20,000 in cash for winners of the Safety track! (Up to $10k/$6.5k/$3.5k for 1st/2nd/3rd place).

RESOURCES Learn more about their grants.

⧉ Learn More Here

PRIZES Up to $6,000 in AWS cloud credits for winners.

RESOURCES Learn more and check out their openings, especially PhD internships in GenAI/LLMs.

⧉ Learn More Here

RESOURCES Learn more, watch for an info session, and check out their openings.

⧉ Learn More Here

RESOURCES Learn more and check out their openings.

⧉ Learn More Here

RESOURCES Learn more and check out their openings.

⧉ Learn More Here

SPECIAL RAFFLE

All participants eligible. Raffle winners receive travel grants for the LLM Agents Summit in August 2025 in Berkeley, CA.

SHOWCASE OPPORTUNITY Hackathon winners will be invited to showcase their projects at the Summit.

Hackathon Winners

Team Eyecognito

Abstract: The U.S. moving industry, valued at $23+ billion annually, presents significant challenges for

consumers due to its fragmented structure, high costs, and logistical complexity. To address this, we created Smooth Operator, an innovative AI-driven solution designed to simplify the moving process. Smooth Operator automates key aspects of the moving experience, including mover selection, cost negotiation and decision-making. By leveraging a multi-agent AI workflow, it minimizes the time, effort, and financial burden of moving, while ensuring users receive optimal value from their selected service providers. Key Features:- Interactive Data Collection: An intelligent agent designed to seamlessly gather detailed information from users, ensuring precise data capture for tailored solutions.

- Dynamic Strategizing: A strategy-driven agent capable of adapting its approach in real-time, leveraging insights from previous conversations to refine and optimize strategies.

- Voice Call Integration: Advanced agents that can initiate voice calls, analyze conversations, and extract actionable insights to drive decision-making.

- Personalized Recommendation: Agents equipped to process complex scenarios and provide users with the most suitable mover options, enhancing efficiency and satisfaction.

Report Demo Code Slides Website

Team Fun Fact

Not only did the project use AI, even the demo video and presentation were AI assisted generated. We used Gen AI to generate a compelling script and narrative as well as used Eleven labs AI voice. It also suggested relevant B-roll footage to capture audience attention.Team ThreadFinders

Abstract: This project seeks to address one of society's most pressing and emotional challenges: reuniting

missing persons with their loved ones. Born from the heartbreaking stories of families torn apart by disappearances, it harnesses the power of Generative AI and Google Cloud Platform (GCP) to create a collaborative and technology-driven solution. By centralizing updates and search efforts, the platform fosters a unified approach where families, volunteers, and organizations can coordinate, communicate, and take actionable steps toward locating missing individuals. The solution not only amplifies the collective impact of these efforts but also provides families with hope and much-needed clarity during critical times.At the heart of this project are specialized Generative AI agents, each contributing essential functionalities to enhance the search process. The Search Area Agent identifies key updates and visually maps progress using interactive Google Maps, while the Event Recognition Agent detects and categorizes events, pinning approximate coordinates for reference. Supporting these efforts, the Update Tracker Agent uses AI to analyze multimodal inputs, such as images and updates, and records valuable information into the centralized Google Cloud Storage. Complementing this, the Media Monitoring & Grounding Agent continuously tracks news updates via APIs, grounding the latest information for relevance and storing it for future use. Finally, the News Analyzer Agent interprets media news, offering actionable advice to optimize search strategies while maintaining safety protocols.

By combining these agents into a cohesive ecosystem, the project delivers a comprehensive solution that blends technology and human empathy. Whether through the visualization of search zones, strategic recommendations, or real-time updates, it ensures every piece of information is leveraged to its fullest potential. This integrated approach empowers communities and families to collaborate effectively, reaffirming the belief that no search is in vain and that technology can play a pivotal role in reuniting loved ones.

Team Fun Fact

We’ve been best friends for six years but only met in person five times—all at conferences—because we’re always living in different places.Team 433ventures

Abstract: US financial companies are spending more money than ever to attract new customers—costs are at an

all-time high. For example, US insurance companies pay up to $54.91 each time someone clicks on their search ads (according to the O&E Insurance Observatory). Over 75.7% of customers start but don’t finish filling out application forms (Formstory Blog).We're introducing a new, simpler way for users to complete online applications. Our platform uses an AI agent and the OpenAI Realtime API to add an option on financial websites: ""I want an AI to call me and help complete my application."" When users select this, the AI calls them to gather the needed information over the phone, making the process easy and personal.

After the call, the AI fills out the application form and sends it to the user to review and confirm. If the call is interrupted, the AI continues the conversation through email or messaging apps until the application is finished or the user decides not to proceed (without spamming them). This new type of user experience makes it easier for customers to complete applications, reducing the number of people who abandon forms.

Report Demo Code Slides Website

Team Fun Fact

Our project started as a simple technical experiment to test the limits of LLMs—particularly their tool-calling capabilities—just for fun. But before we knew it, we accidentally tripped into full-blown startup mode. One moment, we were running tests; the next, we were drafting pitch decks and discussing equity splits. Oops!Team TerminAI

Abstract: TerminAI is a powerful terminal that demonstrates the working of multi-model architectures. With

TerminAI users can directly type in plain language what they want and have that be executed. TerminAI exhibits duality, that is, if the user enters a bash command directly, then that is immediately executed without the delays introduced by the models.It is designed to help novice users familiarise themselves with the terminal, while ensuring that nothing they do, harms the system in a big way. It also helps experienced users by streamlining there usage of the terminal and allowing them to achieve more.

TerminAI is a complete LAM framework based on python. It can execute prompts entered by the user, leveraging Gemini to do the prompt analysis. Its elegant and looks beautiful too (whitish grey!)

Team Fun Fact

I worked solo on this project, but it wouldn’t have started without my friend. While I was explaining my idea to him, he pulled up this hackathon and said, ‘If you’re doing it, why not submit it here?’ Though he was leading another competition and couldn’t code with me, he had a key insight that pushed us to pivot midway. That led to Version 2, and I spent weeks refining and building it. It’s been an intense but rewarding journey, and I’m glad to see it all come together.Team StoryLabs

Abstract: StoryLabs is a full-stack web app leveraging multimodal AI to engage and grow with young readers

through stories. For busy parents, finding engaging, personalised reading material for children is challenging. Traditional books fail to adapt to each child’s interests, reading level, and rapidly evolving abilities, leaving parents struggling to keep up. StoryLabs addresses this gap by transforming the reading experience into an interactive, personalised journey.StoryLabs leverages AI to create tailored stories that match a child’s unique needs. With dynamic illustrations, captivating voiceovers, and adaptive storytelling, it brings tales to life. We even include the child in the story! Designed to grow with the child, StoryLabs ensures an engaging and scalable reading experience.

StoryLabs empowers children to become curious explorers while providing parents with a tool that generates resources personalised for their child. By fostering a love for reading through personalised, magical adventures, StoryLabs is reimagining how children learn and grow with technology.

Team Fun Fact

Yeo Cheng Yong and Chen Enjiao (Ernie) are engineers building data-driven applications for the newsroom at Tech in Asia in Singapore. Sparked by Cheng Yong’s quest to support her child’s reading challenges and Ernie’s experience in AI storytelling, they created StoryLabs — an app that crafts personalized stories with rich media to give kids a unique reading experience.Team Noon Bekesh

Abstract: The hAIre project revolutionizes the HR recruitment process by leveraging advanced AI to

streamline interviews, improve candidate evaluations, and ensure data privacy. Designed to address inefficiencies in traditional hiring methods, hAIre integrates a multi-agent system with modular components, including automated CV screening, dynamic question generation, and real-time evaluation. Its user-friendly HR dashboard allows customizable interview setups, enabling job-specific configurations and multilingual support. With robust anonymization and GDPR compliance, it protects sensitive data while enhancing inclusivity.Key innovations include AI-driven adaptive interviews, a detailed reporting module for structured feedback, and scalable architecture for real-time decision-making. The platform supports comprehensive candidate assessments through speech-to-text (STT), text-to-speech (TTS), and large language model (LLM) integration. Future directions involve facial and audio analysis for emotional insights, an HR analytics dashboard for data-driven hiring strategies, and human-in-the-loop systems for nuanced decision-making. By automating routine tasks and ensuring consistency, hAIre empowers HR teams to focus on strategic talent acquisition, setting a new standard in AI-enhanced recruitment.

Team Fun Fact

Our team, Noon Bekesh, is named after the fine art of sopping up every last bit of food with bread in Persian, similar to scarpetta in Italy. Not for the environment, though… Financially, we’re one step away from eating the pan itself.Team Recall

Abstract: Recall is an agentic system for interactive videos. Our project’s goal is to build a video system capable

of supporting chat style interactions with the user. Our narrow focus is on presentation style videos, such as in a conference, workshops or in MOOCs like this LLM Agents Berkeley Course. The project aims to allow users to interact with videos through a chat-like interface, where the system responds with video snippets from the source video and can handle factual questions, summarization, and explanation-style interactions. This system has several potential applications, such as an AI-based teaching assistant for MOOCs, engaging user experiences with long videos like presentations or enterprise courses, and building an engaging video-modality-based AI tutor.The overall system architecture involves users interacting with the system through a multimodal content input, which goes through a (i) video and document ingestion pipeline to index the video, create a knowledge base and store the indexed content in it and then (ii) the inference pipeline that uses and processes the recall knowledge base to interact with the user. Both components use an Agentic design as multiple calls are made to OpenAI LLMs using carefully RAG-crafted prompts. We use Langfuse for tracing, data collecting and eval measurement. The ingestion pipeline handles long videos from 1 to 8 hours in duration, as well as related online content, such as PDFs or text documents. The inference pipeline handles user queries, including real-time voice interaction. The tech stack includes a combination of Streamlit/Replit for the frontend, Whisper/CLIP/GPT4o/OpenAI-embedding for LLMs, QdrantDb for vector database, and OpenAI Realtime API for voice interaction.

Video Ingestion Pipeline: The content ingestion pipeline processes documents by chunking and sharding, then uses an embedding model to create document embeddings and stores them. The video is also transcribed, and the transcript is chunked, embedded, and stored. Audio transcription with timestamps is accomplished using the Whisper model, which can be extended to multilingual support, and these timestamps are used as an index to match against video segments. Video content is processed using a keyframe image sequencer, which converts video into significant frames using a CLIP-like model and extracts text, then embeds and stores them. The keyframe video sequencer allows for some experimentation with different implementations of keyframe-making algorithms. Related textual documents are parsed using text/pdf document parser/chunking. The knowledge base stores vector embeddings for images, textual documents, transcriptions, original video content, and related metadata for snippet generation.

Recall Inference Pipeline: The inference pipeline begins with a user query that is processed through a query planner using an LLM agent, converted to embeddings, and then a retriever. Retrieved documents are then used to generate a textual response using an LLM agent as well as the corresponding video snippets. The aggregated response is then returned to the user. The video inference pipeline includes a query planner that converts user input into one or more queries, context creation using RAG-style retrieval of textual content and related images, LLM-based response generation given context, LLM-based snippet timestamp generation by matching response to snippet timestamps, snippet output generation and a video player with a websocket control channel, and integration with OpenAI Realtime API for voice-based user experience.

Team Youth-AI-Safety

Abstract: Youth-AI-Safety @ UIUC SALT Lab. Recent advances in Large Language Model (LLM) research have

powered the rapid growth of Generative AI chatbots, offering human-like conversational experiences. As these applications gain popularity among young users, critical safety challenges emerge—ranging from boundary violations to harmful content generation—that traditional moderation systems struggle to address. To tackle these issues, we conducted an in-depth analysis of real-world youth-AI interactions, identifying unique risks specific to this context. Based on our findings, we developed YouthSafeAgent, a risk taxonomy capturing a wide spectrum of safety concerns, and designed a parental control system that leverages LLM-based risk detection. This work represents a pioneering step toward enhancing youth safety in AI-driven environments and provides a foundation for future research, policy development, and industry practices in youth-AI safety.Team Fun Fact

Our team, Youth-AI-Safety @ UIUC SALT Lab, has an unexpected 'Y' connection—every team member’s first name starts with the letter 'Y,' and so does our project name! It wasn’t planned, but this ‘Y’ coincidence feels like a perfect fit, symbolizing our shared focus on youth safety in the evolving world of AI.Team Guanabara AI

Abstract: Our project aims to reduce the cognitive burden faced by professional traders. By developing a

multi-agent framework called FinSage, we can help traders analyze complex market information by efficiently filtering through noise to deliver the most relevant and accurate data within minutes. Our approach seeks to enable traders to quickly uncover actionable insights for informed trading decisions.FinSage consists of six specialized agents, each with a distinct role:

- Supervisor Agent: Orchestrates overall operations and manages which agents to activate for each query.

- Financial Metrics Agent: Provides comprehensive technical metrics for analyzing a company's financial health and stock performance.

- News Sentiment Agent: Analyzes sentiment across company news and specific market sectors.

- SQL Agent: Accesses a historical database containing data from 2009 to 2022 for 57 NYSE-listed companies.

- Synthesizer Agent: Combines data collected by other technical agents to generate concise, actionable answers to user queries.

Users can personalize their experience by entering their trading profile. FinSage then tailors its responses and recommendations based on the user's risk tolerance, investment style, and expected return time horizon. The application is intuitive—users simply input their trading profile and a specific question like, Should I buy META stocks today?" and FinSage will come with a response.

Team Fun Fact

The team came together through shared passion and collaboration on the Kaggle platform. Some members were once mentored by the team leaders, while others were long-time hackathon partners. Their combined experience, teamwork, and camaraderie ultimately led them to win this exciting challenge!Team TBD

Abstract: Tabular data, prevalent in fields like medicine, finance, and science, presents unique challenges

due to its structural complexity and heterogeneous nature. While Large Language Models (LLMs) such as GPT-4o and LLaMA excel in unstructured data tasks, they face limitations in handling the structured, multidimensional nature of tabular data and performing complex reasoning. This paper introduces DataSense, a comprehensive benchmark designed to evaluate LLMs' capabilities in processing tabular data across multiple domains and task types.DataSense distinguishes itself from existing benchmarks by focusing on multi-domain data analytics and synthesizing diverse questions across business intelligence, CRM, demographics data, and research data. The benchmark comprises 230 questions across 13 tables from 9 different datasets, evaluating three key areas: data curation, information retrieval, and statistical analysis. Questions are categorized into three difficulty levels and designed to test various aspects of tabular data processing, from simple value retrieval to complex multi-hop reasoning tasks.

The study evaluated four prominent LLMs (Claude 3.5 Sonnet, GPT-4o, Gemini-1.5 Pro, and GPT-3.5 Turbo) using the LangChain framework. Results showed that Claude 3.5 Sonnet performed best overall with 68.3% accuracy, followed by GPT-4o (58.3%) and Gemini-1.5 Pro (55.2%), while GPT-3.5 Turbo achieved 37.4%. The research revealed that while LLMs excel at data curation tasks (with accuracies ranging from 72.2% to 86.1%), they struggle significantly with multi-step reasoning and multi-table operations, particularly in domain-specific contexts like CRM data analysis.

These findings highlight both the potential and limitations of current LLMs in handling tabular data tasks. While they show promise for automating data curation and simple analysis tasks, there remains significant room for improvement in complex reasoning scenarios and multi-table operations. This benchmark provides a valuable tool for assessing and comparing LLM capabilities in tabular data processing, while also identifying specific areas requiring further development.

Team Fun Fact

A fun fact about our team involves the team name TBD (to be declared), which later turned out to be perfectly fitting and now stands for team benchmark data.Team iVISPAR

Abstract: Large Vision-Language Models (LVLMs) are known to face challenges with spatial reasoning and

visual alignement. iVISPAR addresses this limitation by providing an interactive benchmark designed to evaluate the spatial and visual-spatial reasoning capabilities of LVLMs as agents. The benchmark focuses on a selected scenario: the sliding tile puzzle, a classic problem that requires logical planning, spatial awareness, and multi-step problem-solving.Team LLMS are Good Good

Abstract: Vision and Language Navigation (VLN) in real-world environments has long been a core

challenge in AI research. While previous studies have made strides in developing intelligent agents, many of these efforts have been limited by overly controlled or synthetic environments. To overcome the limitations, this paper introduces a novel benchmark aimed at pushing the capabilities of language agents in real-world vision, reasoning, and decision-making. Drawing inspiration from the Scavenger Hunt game at UC Berkeley, we design a series of experiments where agents navigate complex urban street environments to accomplish specified tasks. Our benchmark not only evaluates an agent’s ability to perceive and understand its surroundings but also measures its performance in questioning, action reasoning, and efficiency.This benchmark provides a robust foundation for advancing future research in real-world Vision and Language Navigation.

Team o1-maximum

Abstract: Large Language Models (LLMs) excel in reasoning and in-context learning but often struggle in

real-world scenarios where tasks are ambiguous or under-specified. Fine-tuning such models for specific environments is impractical due to computational and data constraints. To address these limitations, we introduce Self-Supervised Explorative Agent Learning (SSEAL), a novel framework that enables black-box agents to autonomously adapt to their environments. SSEAL systematically enhances task performance by leveraging self-supervised exploration to optimize input prompts, resolving ambiguities before task execution.SSEAL operates in two phases: exploration and execution. During the one-time exploration phase, the agent interacts with the environment to clarify its structure and generate insights. This understanding is then used to create optimized prompts containing clarified task instructions, updated environmental context, and/or few-shot examples derived from the exploration. In the execution phase, the optimized prompt guides task performance, ensuring accuracy and reducing ambiguity. Notably, SSEAL treats the model as a black box, requiring no changes to its internal parameters, making it a lightweight and adaptable solution.

We demonstrate SSEAL's effectiveness through extensive experiments across diverse tasks, including function calling, model hierarchies, robotics, and software engineering. In function-calling tasks, SSEAL improves accuracy from 5% to over 80% in challenging benchmarks like NexusBench and achieves significant gains in real-world-inspired environments such as LinuxTerminal. In model hierarchies, SSEAL transfers exploration findings from stronger to weaker models, enabling smaller models to achieve comparable performance at reduced costs. In robotics, SSEAL enhances task success rates by 9%, and in software engineering, it improves tool-calling accuracy for development agents. These results showcase SSEAL's potential as a computationally efficient framework for long-term, high-performance deployments.

Team TARS

Abstract: TARS: Team for Advanced Reasoning Systems. LLM-based agents have the potential for immense

impact across various applications. However, existing work typically develops architectures for specific environments, which falls short of commercial scalability, offers no clear path towards general intelligence, and suffers from the fundamental limitation of autoregressive LLMs. On the other hand, humans are generalists who achieve goals in diverse environments with a single cognitive architecture. Furthermore, human reasoning is not just linear, autoregressive reasoning, but also include forward-looking, simulation-based reasoning using an internal world model.Inspired by these insights, we develop AgentModel, a general architecture for optimal goal-oriented agents. AgentModel formulates decision-making as maximizing the probability of achieving goals over latent representations of agent trajectories, which involves proposing plans, simulating the outcomes using a world model, and evaluating goal achievement. Combined with additional modules such as perception, memory, and acting, AgentModel can flexibly plan in a wide range of environments using the latent representation space of natural language, while overcoming the limitation of autoregressive LLM reasoning by fully exploring each option and correcting errors.

In extensive experiments on web browsing tasks, our proposed architecture improves the success of flight search from 0% to 32.2%, while world model planning shows consistent advantage of up to 124% over autoregressive planning, which demonstrates the advantage of world model simulation as a reasoning paradigm.

Report Demo Code Slides Website

Team Fun Fact

Both of the team members love natto but neither are Japanese ;)Team Cognitive LLM Agents

Abstract: Existing multi-agent approaches (ReAct, AutoGen) often rely on multiple task-specific agents

who coordinate through structured roles and predefined communication patterns. This allows them to generate reasoning traces and task-specific actions in a sequential manner, allowing them to solve interactive tasks more effectively than imitation and reinforcement learning. However, this approach limits their generalization to diverse environments and types of problems. We aim to design a system of specialized LLM agents that collaborate based on core cognitive functions such as memory, summarizer, observer, evaluator, and learner. We believe human intelligence emerges from the communication of information between multiple specialized subsystems responsible for different cognitive processes, and our goal is to mimic this with a multiagent system. We apply our approach, named SallCo, on the ALFWorld dataset. We demonstrate the effectiveness of our approach by showing that it receives superior results to the baseline AutoGen approach, using only the assistant, environment proxy, grounding and execution agents.Team ForesAIght

Abstract: Recent studies have discovered that LLMs have serious privacy leakage concerns, where an

LLM may be “fooled” into outputting private information under carefully crafted adversarial prompts. These risks include leaking system prompts, personally identifiable information, training data, and model parameters. Most existing red-teaming approaches for privacyleakage rely on humans to craft the adversarial prompts. A few automated methods are proposed for system prompt extraction, but they cannot be applied to more severe risks (e.g., trainingdata extraction) and have limited effectiveness even for systemprompt extraction.In this paper, we propose PrivAgent, a novel black-box red-teaming framework for LLM privacy leakage. We formulate different risks as a search problem with a unified attack goal. Our framework trains an open-source LLM through reinforcement learning as the attack agent to generate adversarial prompts for different target models under different risks. We propose a novel reward function to provide effective and fine-grained rewards for the attack agent. We also design novel mechanisms to balance exploration and exploitation during learning and enhance the diversity of adversarial prompts. Finally, we introduce customizations to better fit our general framework to system prompt extraction and training data extraction. Through extensive evaluations, we first show that PrivAgent outperforms existing automated methods in system prompt leakage against six popular LLMs. Notably, our approach achieves a 100% success rate in extracting system prompts from real-world applications in OpenAI’s GPT Store.We also show PrivAgent’s effectiveness in extracting training data from an open-source LLM with a success rate of 5.9%.We further demonstrate PrivAgent’s effectiveness in evading the existing guardrail defense and its helpfulness in enablingbetter safety alignment. Finally, we validate our customized designs through a detailed ablation study. We release our codehere https://github.com/rucnyz/RedAgent.

Team AgentGuard

Abstract: In this project, we propose AGENTGUARD, an autonomous testing and hardening framework for LLM

agent systems through the LLM tool orchestrator. It achieves testing and hardening by leveraging the LLM tool orchestrator’s innate advantages in possessing internal knowledge of tools gained during the agent-building process, the ability to explore unsafe workflows in scalable manner, and the privilege of invoking tools. By evaluating AGENTGUARD with a coding agent, we found that LLMs appeared to have limited knowledge about specific tools (SELinux). Meanwhile, we observed successful evaluation results indicating the feasibility and effectiveness of the design and providing a proof of concept of AGENTGUARD.Team Hoosiers

Abstract: This project introduces a new threat called control flow hijacking, where malicious tool

providers manipulate descriptions and arguments to extract sensitive information and corrupt tool outputs. In this project, automated security scanner was developed to detect such threats within the two major agent development frameworks, LangChain and llama-index, uncovering that 41% of tested integrations are vulnerable to these attacks.Team Agent Lite

Abstract: Large Action Models (LAMs) have revolutionized intelligent automation, but their application in

healthcare faces challenges due to privacy concerns, latency, and dependency on internet access. This report introduces an on-device, multi-agent healthcare assistant that overcomes these limitations. The system utilizes smaller, task-specific agents to optimize resources, ensure scalability and high performance. Our proposed system acts as a one-stop solution for health care needs with features like appointment booking, health monitoring, medication reminders, and daily health reporting. Powered by the Qwen Code Instruct 2.5 7B model, the Planner and Caller Agents achieve an average RougeL score of 85.5 for planning and 96.5 for calling for our tasks while being lightweight for on-device deployment. This innovative approach combines the benefits of on-device systems with multi-agent architectures, paving the way for user-centric healthcare solutions.Team Fun Fact

Many of our ideas for the hackathon were brainstormed over steaming cups of chai!Team DAMCS

Abstract: Developing intelligent agents for long-term cooperation in dynamic open-world scenarios is a

major challenge in multi-agent systems. Traditional Multi-agent Reinforcement Learning (MARL) frameworks like centralized training decentralized execution (CTDE) struggle with scalability and flexibility. They require centralized long-term planning, which is difficult without custom reward functions, and face challenges in processing multi-modal data. CTDE approaches also assume fixed cooperation strategies, making them impractical in dynamic environments where agents need to adapt and plan independently.To address decentralized multi-agent cooperation, we propose Decentralized Adaptive Knowledge Graph Memory and Structured Communication System (DAMCS) in a novel Multi-agent Crafter environment. Our generative agents, powered by Large Language Models (LLMs), are more scalable than traditional MARL agents by leveraging external knowledge and language for long-term planning and reasoning.

Instead of fully sharing information from all past experiences, DAMCS introduces a multi-modal memory system organized as a hierarchical knowledge graph and a structured communication protocol to optimize agent cooperation. This allows agents to reason from past interactions and share relevant information efficiently. Experiments on novel multi-agent open-world tasks show that DAMCS outperforms LLM baselines in task efficiency and collaboration. Compared to single-agent scenarios, the two-agent scenario achieves the same goal with 63% fewer steps, and the six-agent scenario with 74% fewer steps, highlighting the importance of adaptive memory and structured communication in achieving long-term goals.

Report Demo Code Slides Website

Team Fun Fact

It was all about communication and teamwork among the DAMCS team to make DAMCS agents communicate and work together! We are consistently inspired by how we collaborate in real life.Team Trust Issues

Abstract: Decentralized multi-agent systems often lack robust mechanisms for assessing the trustworthiness and

credibility of individual agents when addressing different types of questions. In this work, we introduce a novel approach for tuning such systems, inspired by the multiplicative weights algorithm, to dynamically adjust the trust placed in each agent based on their past performance. Our method involves maintaining a knowledge vector for each agent, which, when compared to a given question, produces a reputation score reflecting the agent’s reliability for that particular question. By distributing these reputation signals to the agents before they collaborate on answering questions, we hypothesize that the system will collectively provide more accurate and reliable answers. This approach is especially promising for multi-agent systems where agents specialize in different domains, as it enhances interdisciplinary problem-solving by adjusting trust levels accordingly. Additionally, we propose that this method can help mitigate the influence of bad actors within the system, further improving its overall robustness and decision-making capabilities.HACKATHON TRACKS

Applications Track

Develop innovative LLM-based agents for various domains, including coding assistants, customer service, regulatory compliance, data science, AI scientists, and personal assistants. Focus on both hard-design problems (novel domain-specific tools) and soft-design problems (high-fidelity human simulations and improved AI agent interfaces).

Benchmarks Track

Create or improve AI agent benchmarks for novel tasks or extend existing ones. Focus on developing multi-modal or multi-agent benchmarks, improving evaluation methods, and creating more robust and efficient testing environments for AI agents.

Fundamentals Track

Enhance core agent capabilities in memory, planning, reasoning, tool-use, and multimodal interactions. Improve existing frameworks, design novel prompting schemes, and develop better methods for agents to interact with various tools and environments.

Safety Track

Address critical safety concerns in AI agent deployment, including preventing misuse, ensuring privacy, improving interpretability, and assessing broader societal impacts. Develop methods for better control, auditing, and accountability of AI agents in various applications and multi-agent systems.

Decentralized and Multi-Agents Track

Develop innovative tools, frameworks, and infrastructure to enhance multi-agent capabilities and decentralized deployment. Investigate how multiple agents interact with each other and how we can better leverage their capabilities. Design novel applications across domains, emphasizing decentralization benefits like robustness, scalability, and privacy.

TIMELINE

| Date | Event |

|---|---|

| October 21 | Begin Hackathon

Participant Sign Up Open (required) |

| October 28 | Team Sign Up Open (required) |

| November 20 | Mid-hackathon Progress Check-in DUE (optional) |

| November 25 |

Credits & API Access Sign Up DUE (optional)

Compute Resources Sign Up DUE (optional) |

| December 19 11:59pm PST |

Fill Out Project Submission Form DUE (required) |

| December 20 | Judging (12/20 9am - 1/7 11:59pm PST) |

| February 12 | Winners Announced |

HACKATHON PROGRAM SCHEDULE

| Date | Program Session |

|---|---|

| November 12 | Your Compute to Win the LLM Agents MOOC Hackathon - 'Get Started' Demos with Lambda

Event Recording |

| November 21 | Building with Intel: Tiber AI Cloud and Intel Liftoff

Event Recording |

| November 26 | Workshop with Google AI: Building with Gemini for the LLM Agents MOOC Hackathon

Event Recording |

| December 3 | Info Session with Sierra

Event Recording |

JUDGING CRITERIA

Applications Track

Strong submissions will demonstrate novel use cases addressing real-world problems, with seamless integration of the LLM agent into the target domain and intuitive UI/UX. Projects should display strong potential for impact and widespread adoption.

Benchmarks Track

Strong submissions will provide comprehensive, standardized benchmarks with clear evaluation criteria for agent capabilities. Another option is to expand and improve existing benchmarks by generating more high-quality data or curating more accurate examples. Project should enable meaningful cross-agent comparisons and offer insights into efficiency, accuracy, and generalization.

Fundamentals Track

Strong submissions will aim to enhance current LLM agent capabilities (e.g., long-term memory, planning, function calling, tool use, or multi-step reasoning). Projects should contain innovative approaches to solving complex problems autonomously.

Safety Track

Strong submissions will thoroughly define and address high-impact safety risks, proposing effective solutions, frameworks, or protocols. Projects should showcase effectiveness through comprehensive testing, validation, and evaluation.

Decentralized and Multi-Agents Track

Strong submissions will expand on limitations of existing frameworks, offering solutions for improved communication, multi-agent collaboration, and scalability. Projects should address practical challenges of real-world deployment.

SUBMISSION

Project submission will happen through our Google Form. For more information, see the submission requirements. Here are the following items to ensure that your project is accepted:

- Video Presentation - Include a link to your video presentation. It should be no more than 3 minutes. Your video should contain a presentation walking through your project and a recorded demo of how your project works.

- Presentation Slides - Include a link to your presentation slides in PDF format.

- Project Code - Include a Github with an informative README with steps on how to run your project. Externally link any large datasets.

- Documentation - Include project information that describes the findings of your project or how it works.

JUDGES

Dawn Song

UC Berkeley

Xinyun Chen

Google DeepMind

Burak Gokturk

Google

Chi Wang

Google DeepMind

Shunyu Yao

OpenAI

Yuandong Tian

Meta AI

Edwin Arbus

OpenAI

Rahul Unnikrishnan Nair

Intel

Chuan Li

Lambda

Henry Xiao

AMD

Will Lu

Orby AI

Elie Bursztein

Google DeepMind

Soheil Koushan

Anthropic

Shai Limonchik

Anthropic

Caiming Xiong

Salesforce

Jason Wu

Salesforce

Tim Weingarten

Adept AI Labs

Josh Albrecht

Imbue

Rumman Chowdhury

Humane Intelligence

Alok Tongaonkar

Palo Alto Networks

Anand Raghavan

Cisco

Pushkar Nandkar

SambaNova Systems

Chenxi Wang

Rain Capital

Corinne Riley

Greylock Partners

Rajko Radovanovic

a16z

Daniel Miessler

Unsupervised Learning

Julian Stastny

Center on Long Term Risk

Henry Sleight

MATS